Before we can add new features to Coda 2 in the Mac App Store, we must first “Sandbox” it: make it comply with a set of Apple guidelines aimed at increasing the security of Mac OS X.

What does this mean, really?

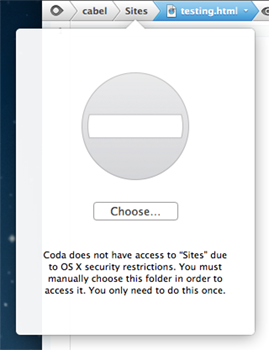

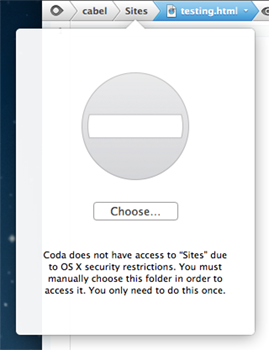

Well, for safety, sandboxing limits an app’s access to your local files until you give the app explicit permission to interact with them, and that permission is remembered from then on. In other words, Coda won’t be able to see most of your local folders until you specifically select them in a traditional “Choose” dialog. The good news? Coda has Sites, and Sites have a Local Path, so once you “Choose” the Local Path when setting up your site, you’ll be able to view and work with that folder in the future. The bad news? You’ve got to reset all of your Local Paths, and if you don’t use Sites in Coda (which would be a bit weird), you’ll hit a few brief bumps.

These changes should affect only the Mac App Store version, and we think most users won’t even notice that anything has changed.

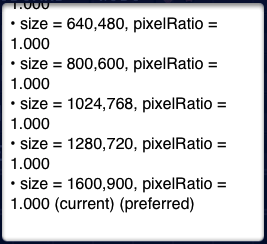

Here’s the full list of what will change, slated for a future Coda release:

1 Local Root

Your site’s “Local Root” will have to be reset. You’ll be prompted to do this the first time you try to connect.

You only have to do this once for each of your sites!

2 Go To Folder

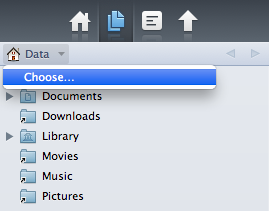

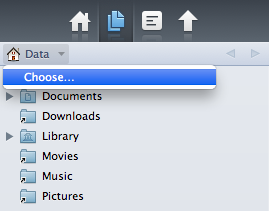

It will no longer be possible to “Go To” a local path by typing it in. “Go To Folder” on a local path will instead bring down a traditional “Choose” panel.

3 Path History

In the Sidebar and the Files browser, the “Path” pop-up can no longer show anything above your defined Local Root. To go above your Local Root, you’ll have to use Choose.

If you’re not working in a Site, you will land in a generic sandboxed home directory, and must Choose another folder to continue.

You only need to “Choose” a folder once!

4 Path Bar Browsers

If you click on a folder outside of your Local Root, you’ll have to choose that folder manually via a Choose panel.

You only need to “Choose” a folder once!

5 Saving Files

It’s no longer possible to Save files you don’t have write access to, and Coda is no longer able to offer an authorization dialog to permit this behavior.

This includes any files you don’t own and don’t have proper permissions to write, such as files owned by a “web” process.

This is also an App Store restriction.

6 Get Info

It’s no longer possible to change permissions of files that require Administrator/Root access from Coda’s Get Info window.

You’ll have to switch to the Finder and adjust permissions there before editing these items.

This is also an App Store restriction.

7 Places

Any local Places will be cleared during the upgrade and will need to be recreated, once.

Note: Places are defined per computer, so they will need to be recreated on each computer where you use Coda.

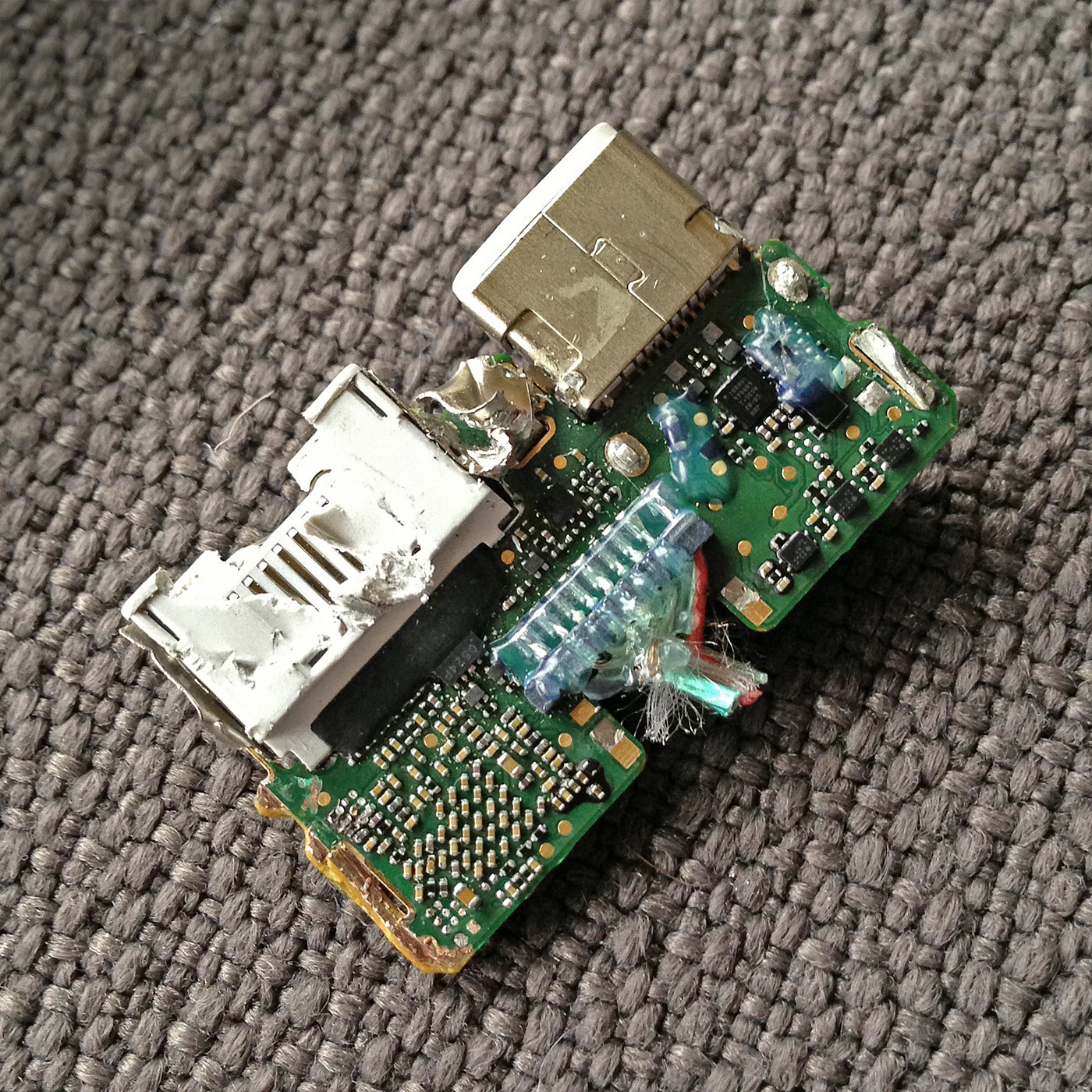

8 SVN and GIT

Tool paths may need to be reset depending on their location on your computer.

9 Local Shell

Coda will no longer be able to open a direct local shell/terminal. (As a workaround, you can turn on Remote Login in Sharing preferences and connect to your own Mac through that.)

That’s it. What do you think?

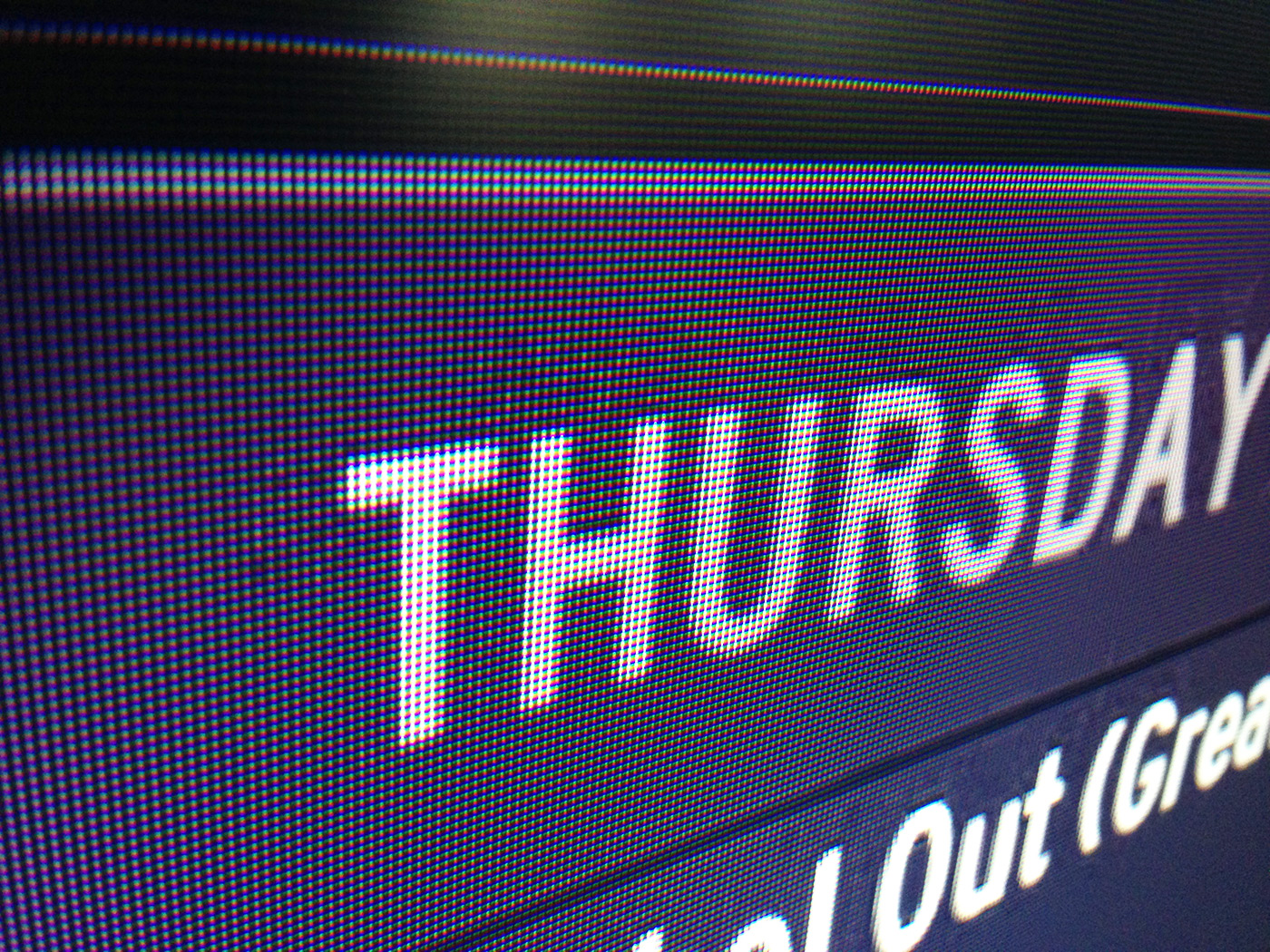

For the truly curious, we’ve put together a special Coda 2 build with these changes.

Experimental

If you wish to try Coda Sandboxing Test, it’s critical that you understand this build is experimental and beta-quality. You must back up your system first.

Also, you must already be running Coda 2.0.6 or later. If you’re using the Mac App Store + iCloud version of Coda 2, you must first turn off iCloud Sync in your current copy of Coda before launching this build.

Got that? Download the build here. (50 MB .zip)

We don’t have a timeline for this release, but we’re curious to hear your general thoughts on Coda 2 and Sandboxing. Once again, we don’t think these changes will affect most people, but we’d love it if you could take this survey:

[polldaddy survey="CE7F658FF4C50ABA" type="button" title="Take Our Survey!" style="inline" text_color="000000"]

Thanks for reading, and thanks for using Coda 2. We’re excited to finish sandboxing and start work on more new, awesome things!